This post follows up on a Twitter thread I posted in November exploring ways of measuring the predictability of teams. I also discussed some of these ideas in a Drunk Agile episode.

When I begin working with an organisation on an agile transformation, an early conversation is around successful outcomes. My work on Strategy Deployment is all about answering the question “how do I know if agile is working?”

Sometimes the discussion is around delivering more quickly (i.e. being more responsive to business and customer needs). Other times it is about delivering more often (i.e. being more productive and delivering more functionality). Both of these are relatively easy to measure. Responsiveness can be tracked in terms of Lead Time, and productivity can be measured in terms of throughput.

However, the area that regularly gets mentioned that I’ve not found a good measure for yet is predictability. In other words, delivering work when it is expected to be delivered.

Say/Do

Before I get into a few ideas, let’s mention one option that I’m not a big fan of – the “say/do” metric. This is a measure of the ratio of planned work to delivered work in a timebox.

Firstly, this relies on time-boxed planning. This means it doesn’t work if you’re using more of a flow or pull-based process.

Secondly, the ratio is usually one of made-up numbers: either story point estimates for Scrum or business value scores for SAFe’s Flow Predictability. This makes it far too easy to game, by adjusting either the numbers used or the commitment made. All it takes is to over-estimate and under-commit to make it look like you’re more predictable without actually making any tangible improvement.
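To make the gaming problem concrete, here is a minimal sketch. It assumes say/do is calculated as delivered points divided by planned points for a single timebox, which is one common reading of the metric; the function name and the numbers are purely illustrative.

```python
def say_do_ratio(planned_points: float, delivered_points: float) -> float:
    """Delivered work as a fraction of planned work for one timebox.

    Assumption: say/do = delivered / planned, so 1.0 means everything
    that was planned was delivered.
    """
    if planned_points <= 0:
        raise ValueError("planned_points must be positive")
    return delivered_points / planned_points


# An honest timebox: 40 points planned, 30 delivered.
honest = say_do_ratio(planned_points=40, delivered_points=30)

# The same delivered work, gamed: estimates are inflated by 50%
# (30 -> 45 points) and the team only commits to those 45 points.
gamed = say_do_ratio(planned_points=45, delivered_points=45)

print(f"honest say/do: {honest:.2f}")
print(f"gamed say/do:  {gamed:.2f}")
```

The amount of work finished is identical in both cases. Only the numbers around it have changed.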

System Stability

Another approach to predictability is to say that a system is either predictable, or it is not. With this frame, the concept of improving predictability is not valid. The idea builds on the work of Donald J. Wheeler, Walter A. Shewhart and W. Edwards Deming. These three statisticians would treat a system – in this case, a product delivery system – as either stable or unstable. An unstable system, with special cause variation, is unpredictable. By understanding and removing the special-cause variation, we are left with common-cause variation, and the system is now predictable.
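One way to look for special-cause variation is an XmR (individuals and moving range) chart of the kind Wheeler describes. The sketch below is a simplified illustration, not a full process behaviour chart: it assumes Lead Times are listed in completion order, uses the standard 2.66 scaling constant on the average moving range, and floors the lower limit at zero because a Lead Time cannot be negative. The function names and sample data are hypothetical.

```python
from statistics import mean


def xmr_limits(values):
    """Return (lower, centre, upper) natural process limits for an XmR chart."""
    if len(values) < 2:
        raise ValueError("need at least two values to compute moving ranges")
    # Moving range: absolute difference between consecutive values.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    centre = mean(values)
    spread = 2.66 * mean(moving_ranges)
    # Assumption: lead times cannot be negative, so floor the lower limit at 0.
    return max(0.0, centre - spread), centre, centre + spread


def special_cause_points(values):
    """Return (index, value) pairs that fall outside the natural process limits."""
    lower, _, upper = xmr_limits(values)
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical lead times in days, in the order the items were completed.
lead_times = [5, 6, 5, 7, 6, 5, 6, 30, 5, 6]
signals = special_cause_points(lead_times)
print(signals)
```

With this data, the 30-day item is the only point outside the limits, so that is the one worth investigating. Lead Time data is often skewed, so treat the limits as a prompt for a conversation rather than a verdict.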

For example, we can look at Lead Time data over time. For a stable system, WIP will be managed and Little’s Law will be adhered to. In this scenario, we can predict how long individual items take, by looking at percentiles. Different percentiles will give us different degrees of confidence. For the 90th percentile (P90), we can say that 90% of the time we will complete work within a known number of days.
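As a rough illustration of reading percentiles from Lead Time data, the sketch below uses the nearest-rank method. That choice is an assumption on my part; spreadsheet and library functions often interpolate instead and can give slightly different answers. The sample data is made up.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile: the smallest value with at least p% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical lead times in days for ten completed items.
lead_times_days = [3, 5, 4, 8, 12, 6, 7, 21, 5, 9]

p50 = percentile(lead_times_days, 50)
p90 = percentile(lead_times_days, 90)
print(f"50% of items finished within {p50} days")
print(f"90% of items finished within {p90} days")
```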

With this approach, the tangible improvement work of making a system predictable is that of making the system stable. By removing the noise of special cause variation, we are able to make predictions on how long work will take and when it will be done.

Meeting Expectations

An alternative approach is to think of predictability as meeting expectations. Let’s assume I know my P90 Lead Time as described above. I know that there is only a 10% chance of delivering later than that time. However, there is still a 90% chance of delivering earlier than that time. Similarly, I might know my 10th percentile Lead Time (P10). This tells me that there is a 90% chance of delivering later, but only a 10% chance of delivering sooner.

If the distribution of the data is very wide, then there will be a wide range of possibilities. It is still difficult to predict a date between the “unlikely before” P10 date and the “unlikely after” P90 date. Thus it is difficult to set realistic expectations. Saying you might deliver anytime between 1 and 100 days is not being predictable. Julia Wester describes this well in her blog post on the topic using this diagram.

With this approach, the tangible improvement work is reducing the distribution of the data to remove the outliers.

Inequality

One way of measuring this variation of distribution is to simply look at the ratio of the P90 Lead Time to the P10 Lead Time (hat-tip to Todd Little for this suggestion). This is similar to how Income Inequality is measured. Thus if our P90 Lead Time is 100 days, and our P10 Lead Time is 5 days, we can say that our Lead Time Inequality is 20. However, if our P90 Lead Time is 50 days and our P10 Lead Time is 25 days, our Lead Time Inequality is 2. We can say that the lower the Lead Time Inequality, the more predictable the system is.
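The ratio is simple enough to sketch in a few lines. The example below assumes nearest-rank percentiles and uses two made-up datasets chosen so that their P90 and P10 values match the numbers in the paragraph above.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile (an assumed method; interpolating methods differ slightly)."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def lead_time_inequality(lead_times):
    """Ratio of the P90 Lead Time to the P10 Lead Time."""
    return percentile(lead_times, 90) / percentile(lead_times, 10)


# Hypothetical datasets: P90 = 100, P10 = 5 and P90 = 50, P10 = 25.
wide = [5, 5, 10, 20, 30, 40, 60, 80, 100, 120]
narrow = [25, 28, 30, 32, 35, 38, 40, 45, 50, 55]

wide_ratio = lead_time_inequality(wide)
narrow_ratio = lead_time_inequality(narrow)
print(wide_ratio, narrow_ratio)
```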

Coefficient of Variation

Another way is to measure the coefficient of variation (CV), which gives a dimensionless measure of how widely a distribution is spread relative to its mean (hat-tip to Don Reinertsen for this suggestion). The coefficient of variation is the ratio of the standard deviation to the mean. A dataset with a wide variation would have a larger CV. A dataset of all equal values would have a CV of 0. Therefore, we can also say that the lower the Lead Time Coefficient of Variation, the more predictable the system is.
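A minimal sketch of the calculation follows. It assumes the population standard deviation (`statistics.pstdev`); using the sample standard deviation instead would give a slightly larger figure on small datasets. The data is illustrative.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Standard deviation divided by the mean (population standard deviation assumed)."""
    average = mean(values)
    if average == 0:
        raise ValueError("CV is undefined when the mean is zero")
    return pstdev(values) / average


# Hypothetical lead times in days.
identical = [10, 10, 10, 10]
varied = [2, 4, 4, 4, 5, 5, 7, 9]

cv_identical = coefficient_of_variation(identical)
cv_varied = coefficient_of_variation(varied)
print(cv_identical, cv_varied)
```

Because the CV is dimensionless, it can be compared between teams whose typical Lead Times are very different.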

Consistency

There are probably other statistical ways of measuring the distribution, which cleverer people than me will hopefully suggest. What I think they have in common is that they are actually measuring consistency (hat-tip to Troy Magennis for this suggestion). Lead Times in a wide distribution might be mathematically predictable, but they are not consistent with each other. Lead Times in a narrow distribution are more consistent with each other and thus allow for more reliable predictions.

Aging WIP

One risk with these measures of Lead Time consistency is that there are essentially two ways of narrowing the distribution. One is to look at lowering the upper bound and work to have fewer work items take a long time. This is almost certainly a good thing to do! The other is to consider increasing the lower bound and work to have fewer items take a short time. This is not necessarily a good thing to do! That raises a further question. How do we encourage more focus on decreasing long Lead Times and less on increasing short Lead Times?

The answer is to focus on the Work in Process and the age of that WIP. We can measure how long work has been in the process (started but not yet finished). This allows us to identify which work is blocked or stalled and get it moving again. Thus we can get it finished before it becomes too old. Measuring Aging WIP encourages tangible improvements by actively dealing with the causes of aged work. This might be addressing dependencies instead of just accepting them. Or it could be encouraging teams to break down large work items into smaller deliverables (right-sizing).
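As a sketch of what tracking Aging WIP could look like, the example below calculates the age of each in-progress item and flags those older than a threshold. The threshold is an assumption: a team might choose a percentile of its historic Lead Times, or simply a number it is comfortable with. The item IDs and dates are invented.

```python
from datetime import date


def aging_wip(started_dates, today, threshold_days):
    """Return (item, age_in_days) for in-progress items older than the threshold, oldest first."""
    ages = {item: (today - started).days for item, started in started_dates.items()}
    flagged = [(item, age) for item, age in ages.items() if age > threshold_days]
    return sorted(flagged, key=lambda pair: pair[1], reverse=True)


# Hypothetical in-progress items and the dates they were started.
in_progress = {
    "A-101": date(2024, 1, 2),
    "A-102": date(2024, 1, 20),
    "A-103": date(2024, 1, 10),
}

stale = aging_wip(in_progress, today=date(2024, 1, 25), threshold_days=12)
for item, age in stale:
    print(f"{item} has been in progress for {age} days")
```

The point is to look at this list regularly and ask what is stopping the oldest items from finishing, rather than waiting for them to show up as long Lead Times after the fact.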

In summary, I believe that measuring Aging WIP and Blocked Time will lead to greater consistency of Lead Times with reduced Lead Time Inequality and Coefficient of Variation, which will, in turn, lead to better predictions of when work will be done.

Caveat

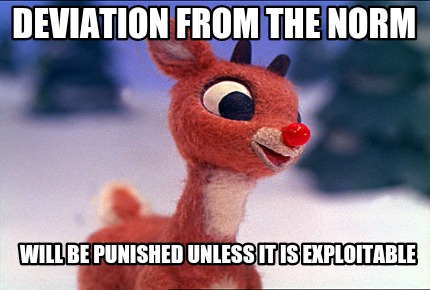

A couple of final warnings to wrap up. The first is that these are just ideas at this stage. I’m putting them out here for feedback and in the hope that others will try them as well as me. Secondly, I am not promoting eradicating all variation. The following quote and meme seem appropriate given the seasonal timing of this post!

Very well put, thank you Karl

Thanks for a thoughtful piece; if your clients are anything like mine (and I suspect they are!) the usual ask is “make us go faster” with a larger secondary ask of “make us more predictable.” Problem is, as you imply: predictability comes in many forms and the question you ask is more important than the answer – it’s the “42 problem”, “Do you really understand the question?”

My gut fear is that most people asking the question are a long way from understanding any of the statistical formulations you suggest. Those like you and me who think about the questions can give very specific answers backed up by statistics.

My fear is, most people who ask the question have a gut feel for what “predictable” is and that gut feel doesn’t allow much space for statistics. Cynical me feels predictability is something of a Holy Grail. That doesn’t mean we can’t make improvements, but it does mean that we will never achieve it.

Measuring predictability involves comparing an estimate of resources against the actual. Studies have found that some people consistently overestimate, while others consistently underestimate; including the person making the estimate improves a prediction model. Similarly, different kinds of activities seem to have an impact on the accuracy of estimates.

The following paper contains a detailed analysis of Agile estimation data https://arxiv.org/abs/1901.01621

If people have project data, I’m happy to offer a free analysis, provided an anonymised version of the data can be made public.

My book Evidence-based Software Engineering discusses what is currently known about software engineering, based on an analysis of all the publicly available data.

pdf+code+all data freely available here http://knosof.co.uk/ESEUR/